Session Abstract

Over the last couple of years, AI has entered our lives and jobs, and it’s clearly here to stay. But before we hand everything over to the machines, let’s take a step back and actually understand what’s going on under the hood. What are tokens and embeddings? What do transformers really do (besides being in disguise)? What are these vectors showing up everywhere? We’re not drawing anything, right? In this session, we’ll unpack the core concepts behind large language models: things like training, parameters, vector search, and more. If you’ve ever used an LLM and thought, “Wait… how does this actually work?”, this talk is for you.

Note to event organizers

This session is about the inner workings of AI. What caused AI to take a huge leap a few years ago? What's happening under the hood?

Christian Peeters

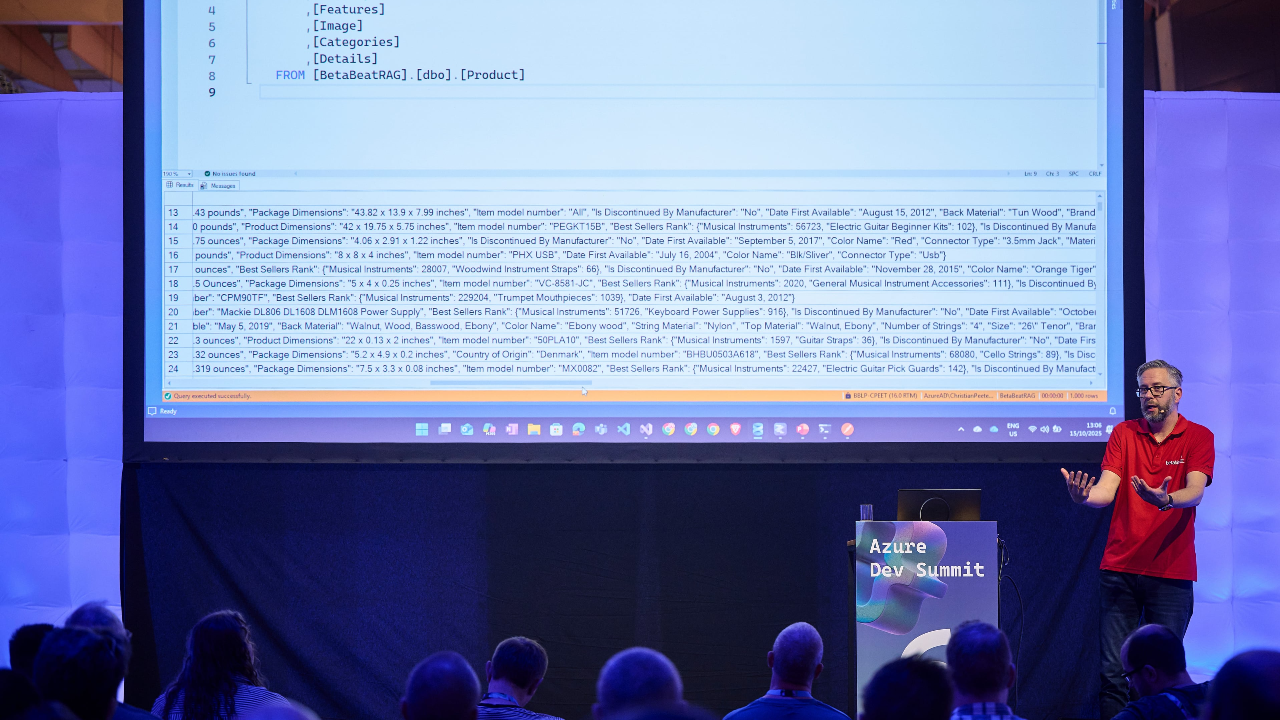

Christian started in 2000 as a software developer. Seven years later he became a Microsoft Certified Trainer and has been teaching other developers ever since. Currently he is a Principal Cloud Architect at Betabit, a software development company specializing in building business-critical applications in the cloud.
