Session Abstract

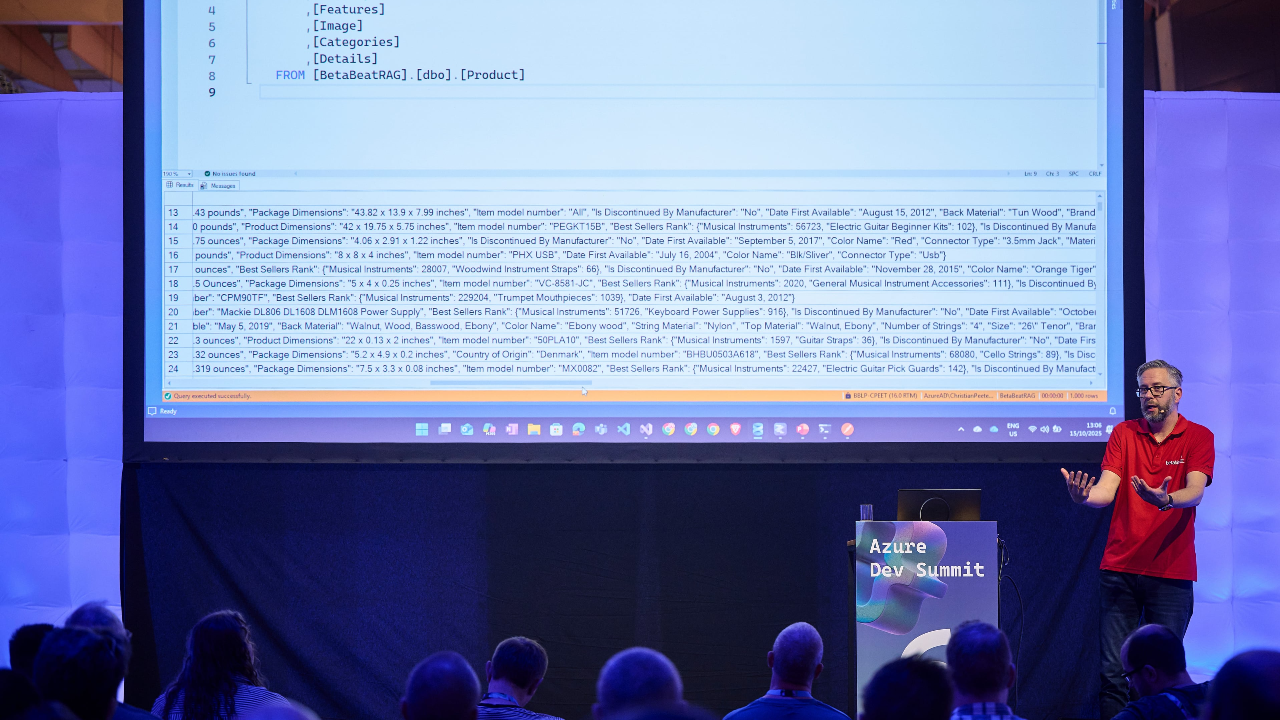

Concerned about data privacy or compliance? Don’t want your sensitive information sent to external AI systems? This session is for you! We’ll explore retrieval-augmented generation (RAG), a method to enhance large language models (LLMs) with your specific, ever-changing data, without that data ever leaving your systems. You’ll learn what a vector database is, how to vectorize your own data, and how to integrate your existing data sources, such as SQL databases, to enrich AI interactions. We’ll implement RAG step by step, running AI models locally (on the “edge”) while keeping full control over your data. By the end of the session, you’ll know how to build an AI assistant that uses your private and volatile data, all without relying on external cloud services. But is the cloud really that scary? We rely on Azure for all our workloads, so we’ll also look at what the cloud can add to this equation.

Note to event organizers

The original session focuses on running everything locally, but it can easily be adapted to run in the Azure cloud.

Christian Peeters

Christian started in 2000 as a software developer. Seven years later he became a Microsoft Certified Trainer, and he has been teaching other developers ever since. He is currently a Principal Cloud Architect at Betabit, a software development company specializing in building business-critical applications in the cloud.
